New AI Powers for Music, Film, and Our Planet

Artificial intelligence is transforming most aspects of our lives. Recent news shows AI making big strides in music production, 3D world creation, film direction, and even protecting the planet. OpenAI, Tencent, and Google are at the forefront of these changes. The new tools make everyday tasks faster and smarter, and they also help address global problems. Here is a look at how AI is transforming these fields right now.

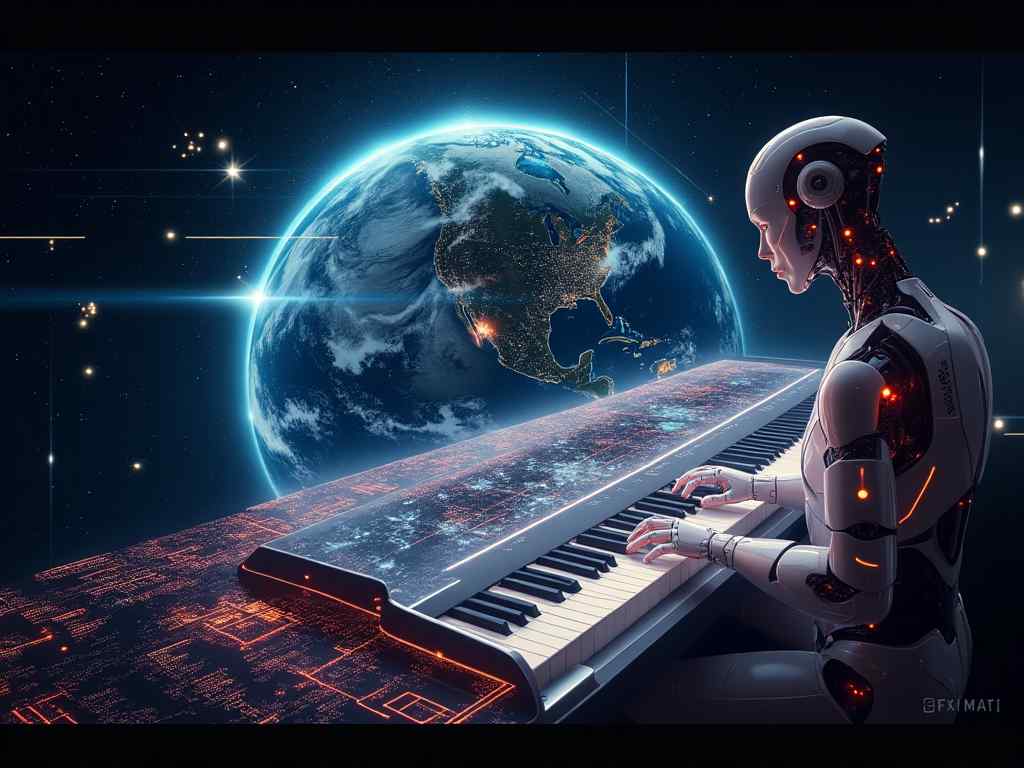

OpenAI’s AI Music Composer

OpenAI is reportedly developing a new music-generating AI. It is a highly detailed system whose training is comparable to what students study at Juilliard. It can generate emotional songs from text or voice prompts. For example, you might enter “sad piano music, soft rain,” and the AI would create a complete composition. You could also upload your own vocals and the AI would add full accompaniment in a few seconds.

OpenAI worked with Juilliard students to train this system. The students helped annotate professional music scores. This taught the AI more than just notes: it learned how real musicians perform, including phrasing, timing, and dynamics. These details are what give music its emotional impact.

This is not OpenAI's first attempt at music AI. In the years before ChatGPT, it built a system called Jukebox, which could imitate various artists and blend musical styles. Now OpenAI is returning to music with an improved approach. The new tool could integrate with other OpenAI products such as ChatGPT or Sora, so video creators could generate visuals and music on the same platform.

The music AI has not been released yet, but sources say it is undergoing serious testing. OpenAI is a highly valued company, and this music generator is not a side project; it is a core one. It is meant to change how musicians, filmmakers, and content creators produce original sound, without requiring complicated music software. You can read more about this development on TechCrunch.

Dia AI Browser for Mac

A new AI browser, Dia, has recently been released on macOS. Dia was developed by The Browser Company and is now available to download for anyone with an Apple Silicon Mac. This release brings it out of its test period. Dia is a lightweight, AI-driven web browser designed to help you work faster and smarter every day.

Dia builds an assistant into your browser tabs. The assistant can read your open pages, understand information across them, and act on it immediately.

How Dia Helps You Work

Dia has many useful features:

- Summarize articles: It can summarize long articles quickly.

- Compare items: If you have multiple product listings open, it can compare prices and features. For example, with two Airbnb tabs open, Dia will compare prices, amenities, and cancellation policies.

- Write emails: The AI can help you draft emails.

- Clean up text: It can edit text without disrupting your work.

- Minor automations: Dia can extract key points from documents, help respond to customer messages, and even give you advice before you make an online purchase.

Context awareness is one of Dia's most important characteristics: it knows what you are looking at. Because the assistant needs to see what you are working on, privacy is also key. Users have full control over what the AI may read, and the browser automatically protects sensitive sites such as banking or healthcare websites.

Dia's launch is well timed. Apple is also adding AI features to M-series Macs, and other browsers such as Arc, Brave, and Opera offer AI assistants too. Dia, however, emphasizes reading and understanding page content and context across many tabs in real time, which goes beyond simple chat or summaries.

For now, Dia supports only M1 and newer Macs. A Windows version is in development. It does not have a paid version and is entirely free to use. For users who juggle many tabs every day, Dia can feel like a real web assistant. You can read more about the Dia AI browser on FindArticles.

Tencent's Real-Time 3D Reconstruction

Tencent unveiled Hunyuan World Mirror 1.1, or World Mirror for short. It is a capable 3D reconstruction model that runs in real time on a single graphics card. The model accepts several kinds of input: a single photo, several photos taken from different angles, or video.

From this input, World Mirror produces detailed 3D information. It can output:

- Point clouds (sets of data points in 3D space).

- Multi-view depth (the distance of objects in each camera view).

- Camera parameters (information about the camera that captured the photos).

- Surface normals (the direction a surface is facing).

- 3D Gaussian splats (a way of representing a 3D scene so it can be rendered).

If you supply additional information, such as calibrated camera settings or sensor depth maps, the model will use it. It integrates these inputs through a mechanism called multimodal prior prompting, which passes all the data through a transformer. Unified decoders then produce each geometric output.
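To see how these outputs relate to one another, it helps to know that a depth map plus camera parameters is enough to recover a point cloud. The sketch below is not World Mirror's code; it is a minimal pinhole-camera back-projection in plain Python, and the function name, the tiny 2x2 depth map, and the intrinsics (`fx`, `fy`, `cx`, `cy`) are all assumptions made up for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def backproject_depth(
    depth: List[List[float]], fx: float, fy: float, cx: float, cy: float
) -> List[Point]:
    """Convert a depth map into camera-space 3D points (pinhole model).

    depth[v][u] is the distance along the camera's z axis at pixel (u, v).
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid depth at this pixel, so no 3D point
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map; one pixel has no measurement (0.0).
depth_map = [[2.0, 2.0], [0.0, 4.0]]
cloud = backproject_depth(depth_map, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(cloud)
```

The pixel with no depth is simply missing from the cloud, which is the same kind of gap you get wherever the input does not cover part of the scene.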

Understanding Limitations and Uses

With just one image, the model does not have enough information to guess at what is out of sight. As a result, gaps may appear wherever the photograph fails to capture the scene. When you feed it several images or video, it builds a far more coherent 3D structure.

Tencent offers a demo on Hugging Face. Their website also provides model weights and a GitHub link to the complete system. The 1.1 release improves on the previous version: it is no longer limited to text and single-image input.

It can run in real time on a single GPU, which helps teams that want to apply this technology in practical settings. These include:

- Robotics: Helping robots understand their surroundings.

- AR try-ons: Enabling virtual try-ons in augmented reality.

- Scene understanding: Letting a device quickly make sense of its environment.

World Mirror outperforms other systems at camera and point map estimation. It also combines tasks that other systems do not: surface normals and 3D Gaussian splatting for generating new views. This means you can create new views without any additional steps. You can find Tencent's 3D model on their Hunyuan site.

Artificial Intelligence Cinema and Live Video

If your work is more about telling stories than building 3D models, two other new AI tools are worth knowing. HoloCine and Krea are making AI video more sophisticated.

HoloCine: Story Directing with AI Memory

HoloCine is an open-source long-video model from HKUST and Ant Group. It generates multi-shot narratives that keep characters, props, and settings consistent across many shots. It can do this because it understands the conventions of filmmaking: shot-reverse-shot, changes in shot scale, and controlled camera movement. In short, it knows the fundamentals of film direction.

The model can also remember details between shots. For example, a small detail such as an embroidered patch on a character's back stays the same throughout the film. This consistency is essential to believable storytelling.

HoloCine comes in several versions:

- A 14B full-attention version with the best quality.

- A 14B sparse inter-shot attention version that is faster but slightly less stable.

- Smaller 5B models for systems with less memory.

Under the hood, HoloCine reuses components from other AI models, such as the Wan 2.2 VAE and a T5 text encoder. The team recommends Flash Attention 3 for speed. If it is not available, the code automatically falls back to Flash Attention 2, which is slower but still works.

HoloCine's prompt format is especially useful. You give it a global caption for the entire scene, including the characters' moods, and then a caption for each shot. You can also specify particular cut frames. This gives you directorial control without having to manipulate every single frame.
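One way to picture that prompt structure is as a small data object: one scene-level caption, a list of per-shot captions, and optional cut frames. The sketch below is purely illustrative. The class name `MultiShotPrompt`, its fields, the frame counts, and the even-split fallback are all assumptions, not HoloCine's actual schema or behavior, so check the project's own documentation for the real format.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class MultiShotPrompt:
    """Hypothetical container for a multi-shot prompt (not HoloCine's real schema)."""

    global_caption: str                # scene-level description, including moods
    shot_captions: List[str]           # one caption per shot, in order
    cut_frames: List[int] = field(default_factory=list)  # optional cut positions

    def shot_ranges(self, total_frames: int) -> List[Tuple[int, int]]:
        """Return a [start, end) frame range for each shot."""
        n = len(self.shot_captions)
        if n == 0:
            raise ValueError("at least one shot caption is required")
        # Assumption: when no cuts are given, split the clip evenly.
        cuts = self.cut_frames or [total_frames * i // n for i in range(1, n)]
        if len(cuts) != n - 1:
            raise ValueError("need exactly one cut between consecutive shots")
        bounds = [0, *cuts, total_frames]
        if any(b <= a for a, b in zip(bounds, bounds[1:])):
            raise ValueError("cut frames must be strictly increasing and inside the clip")
        return list(zip(bounds, bounds[1:]))


prompt = MultiShotPrompt(
    global_caption="A tense reunion in a rainy alley; she is wary, he is apologetic.",
    shot_captions=[
        "Wide shot: she waits under a flickering sign.",
        "Close-up: his face as he steps into the light.",
        "Over-the-shoulder: the embroidered patch on her back as she turns away.",
    ],
    cut_frames=[30, 55],
)
ranges = prompt.shot_ranges(total_frames=81)
print(ranges)
```

Keeping the captions and the cuts in one object makes it easy to check that every shot has a caption and every cut falls inside the clip before anything is sent to a model.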

The developers are transparent about the trade-offs. Full attention gives the highest quality but consumes the most computing power. Sparse inter-shot attention is faster but carries a small stability cost. They also compare it with commercial models such as Kling and Sora 2 on multi-shot consistency. Because HoloCine is open source, you can use it in your own projects without waiting for a private test invitation. HoloCine is available on GitHub.

Krea Realtime: Live Video Generation

Krea offers another approach to creating video: real-time generation. Krea has open-sourced Krea Realtime, a 14B autoregressive video model. It was derived from Wan 2.1 14B using a technique known as Self-Forcing, which converts a diffusion model into a sequential frame-prediction model.

Its speed is its most remarkable feature. It can produce 11 frames per second on a single NVIDIA B200 card using only four inference steps. It is real-time, but not on standard or consumer-friendly hardware: B200 GPUs are very expensive (approximately $30,000 to $40,000). This means Krea Realtime is aimed at professional studios or labs.
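Those two figures imply a rough time budget. The back-of-the-envelope sketch below assumes throughput is spread evenly across frames and that each frame uses all four steps; it ignores decoding and other overhead, so the per-step number is an upper bound, not a measurement. The function name is made up for this example.

```python
from typing import Tuple


def frame_budget_ms(fps: float, steps_per_frame: int) -> Tuple[float, float]:
    """Return (milliseconds per frame, milliseconds per step) for a target frame rate."""
    per_frame = 1000.0 / fps
    per_step = per_frame / steps_per_frame
    return per_frame, per_step


per_frame, per_step = frame_budget_ms(fps=11, steps_per_frame=4)
print(f"{per_frame:.1f} ms per frame, {per_step:.1f} ms per step")
# prints "90.9 ms per frame, 22.7 ms per step"
```

In other words, each step of a 14B model has to finish in roughly 23 milliseconds or less on average, which goes some way to explaining the hardware requirement.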

Krea Realtime includes techniques designed to keep errors from accumulating over long runs, along with memory optimizations for autoregressive video diffusion. Interactivity is its major advantage. You can change prompts while the video is being generated and restyle it on the fly. The first frame appears in about a second. You can even stream webcam video or simple drawings for video-to-video editing.

This means it is not only fast but also responsive enough to support a creative feedback loop. It is genuinely usable for quickly testing and adjusting your ideas. Demos and technical details for Krea Realtime can be found on Hugging Face.
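The reason prompts can change mid-stream is structural: an autoregressive model produces each frame from the frames before it, so whatever prompt is active at that moment shapes the next frame. The toy loop below shows only that control flow. It is not Krea's code; `generate_stream`, `toy_predict`, the prompt schedule, and the context window size are hypothetical stand-ins, and the "frames" are just strings.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


def generate_stream(
    predict_next: Callable[[List[str], str], str],
    prompt_schedule: Dict[int, str],
    num_frames: int,
    context_len: int = 2,
) -> List[str]:
    """Generate frames one at a time, each conditioned on recent frames and the active prompt."""
    context: Deque[str] = deque(maxlen=context_len)  # bounded memory of past frames
    prompt = ""
    frames: List[str] = []
    for t in range(num_frames):
        prompt = prompt_schedule.get(t, prompt)  # pick up a new prompt if one arrives at step t
        frame = predict_next(list(context), prompt)
        context.append(frame)  # the new frame becomes context for the next one
        frames.append(frame)
    return frames


def toy_predict(context: List[str], prompt: str) -> str:
    """Stand-in for a real model: tags the frame with the prompt and the context size."""
    return f"{prompt}#{len(context)}"


frames = generate_stream(toy_predict, {0: "oil painting", 3: "neon"}, num_frames=5)
print(frames)
```

From the fourth frame on, the output follows the new prompt without restarting the stream, which is the behavior that makes on-the-fly restyling possible.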

Google Earth AI for Global Forecasting

Google is improving its Earth AI and making it more accessible. The system already helps forecast floods for more than two billion people, and it provides wildfire, cyclone, and air quality alerts. During the 2025 California wildfires, it alerted approximately 15 million people in Los Angeles and helped them find shelters on maps.

The latest update brings Gemini's reasoning to the system. It also introduces geospatial reasoning, which connects various Earth AI models. These models analyze weather, population density, and satellite imagery. This lets experts ask complex questions and receive a complete answer.

Instead of asking, “Where will the storm strike?” you can now ask, “Which communities are most at risk, and which roads and clinics are vulnerable?” The system provides a single answer.

Gemini Features in Google Earth

The same capabilities have been integrated into Google Earth. Gemini features in Earth let analysts find objects and patterns in satellite imagery using natural language. For example:

- A water company could find dried-up sections of rivers. This helps it predict dust storms during droughts and warn communities earlier.

- Analysts can identify harmful algae blooms to help protect drinking water sources.

This new capability will soon roll out to Google Earth Professional and Professional Advanced users in the United States. Google AI Pro and Ultra subscribers already have higher limits for these features.

Earth AI models, imagery, and environmental data are being made available in Google Cloud to trusted business testers. This lets businesses combine their own data with Google's and build complete workflows from imagery to insights.

Many partners are already using the technology:

- The WHO Africa office is using Earth AI data to forecast cholera risk in the DRC. This helps with planning water, sanitation, and vaccination efforts.

- Planet and Airbus apply Earth AI to satellite images. Planet maps deforestation over time. Airbus monitors vegetation near power lines so it can be cleared to avoid outages.

- Bellwether, from Alphabet's X, is working to improve hurricane predictions with Earth AI on behalf of an insurance broker. This helps settle claims faster and start the rebuilding process sooner.

The main idea is clear. Combining decades of mapping data with Gemini's reasoning makes it possible to reach decisions in minutes rather than months. Google is rolling out these tools gradually: first in Earth, then in Cloud, and finally through special testing programs such as geospatial reasoning.

The Future with AI

AI now writes music, directs films, reconstructs places in three dimensions, and anticipates threats to the planet. These innovations mark a tremendous change in how we design, perceive, and safeguard our world. From customized music scores to live disaster warnings, AI is now a strong collaborator. It is helping us do and know more than ever before.